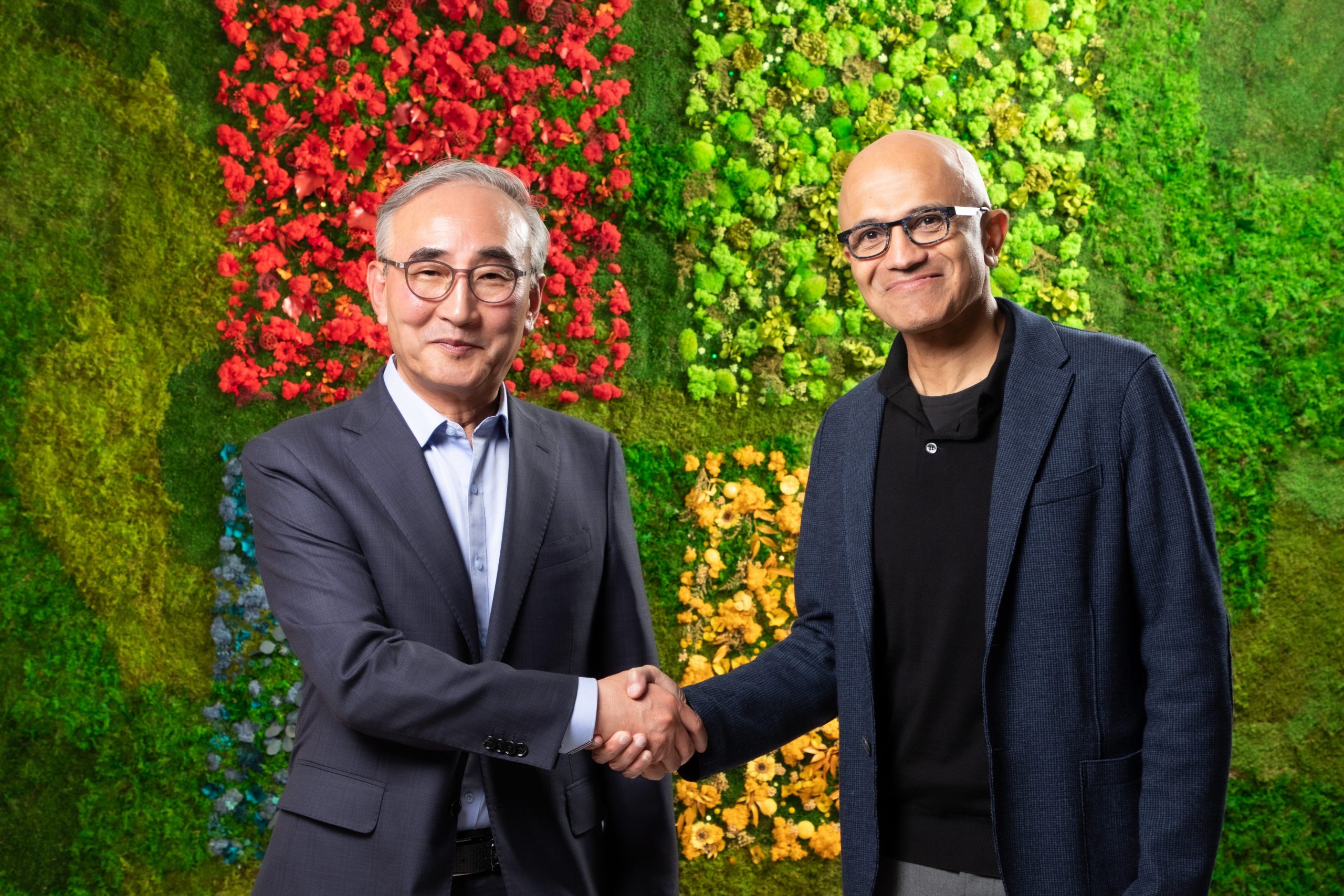

Call for Projects: Collaborative Research with Microsoft Research


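- Purpose

Select collaborative research projects* with Microsoft Research

* Carried out as an "industry (research lab)-linked" project under the 2024 Digital Global Research Support Program (Ministry of Science and ICT). This project falls under Article 9 (Advance Notice and Public Call, etc.), Paragraph 4 of the National Research and Development Innovation Act and Article 9 (Public Call Procedures for R&D Projects and R&D Institutions) of its Enforcement Decree.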

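- Operating principles

Projects are selected through an open call for creative ideas that fit the research topics chosen by Microsoft Research

Microsoft Research experts are matched to each project for collaborative research

Joint researchers* are dispatched to Microsoft Research for 170 days

* Researchers (master's and doctoral students) at the collaborating institution (a Korean university)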

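- Program overview

- Project support

– Scale of support: KRW 2 billion* in total, 15-20 projects

* Budget of the Digital Global Research Support Program (Ministry of Science and ICT): KRW 2 billion

– Research areas: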

Media Foundation

The Media Foundation theme stands at the cutting edge of integrating multimedia with advanced AI technologies. Our goal is to create foundation models capable of learning from rich, real-world multimedia data, mirroring the observational learning processes of human infants. We place a significant emphasis on visual inputs, recognizing their critical role in comprehending the complexities of real-world dynamics and causality. By pioneering innovative technologies, such as neural codecs, we strive to distill diverse media modalities into succinct, semantic representations. While this research theme encompasses a broad spectrum of research areas within multimedia and AI, we hope the collaboration can discover the essential methodologies for constructing robust media foundation models to learn from and interact with the real world, offering substantial potential for breakthroughs that could significantly influence both academia and industry.

Topics:

- Large Video Foundation Models

- Neural Codecs for Video and Audio

- 2D/3D Video Generation and Editing

- ML Fundamentals for Computer Vision and Speech

Automatic Optimization of LLM Powered Programs

The project aims to investigate and develop novel techniques for the automatic optimization of LLM powered programs such as AI assistants and autonomous agents. These programs are becoming increasingly prevalent and complex, but they also face various challenges in meeting real-world requirements, such as users' quality expectations, resource constraints, and reliability issues. Existing development practices involve tremendous manual effort, not limited to prompt engineering. The goal is to enhance the performance of these programs and reduce the need for manual effort by applying machine learning (especially the power of LLMs), program analysis, and system design principles. The expected outcomes include new methods, tools, and benchmarks for evaluating and improving LLM powered programs.

Societal AI

In an era marked by rapid advancements in AI, the impact of these technologies on society encompasses a broad spectrum of challenges and opportunities. To navigate this complex landscape, it is vital to foster an environment of interdisciplinary research. By integrating insights from computer science, social sciences, ethics, law, and other fields, we can develop a more holistic understanding of AI’s societal implications. Our goal is to conduct comprehensive, cross-disciplinary research that ensures the future trajectory of AI development is not only technologically advanced but also ethically sound, legally compliant, and beneficial to society at large. (more information can be found here)

Topics:

- AI safety and value alignment

- LLM evaluation

- Copyright protection in the LLM era

- LLM based social simulation

Reasoning with Large Language Models

Large language models (LLMs) can acquire general knowledge from huge text and code datasets through self-supervised learning and handle various tasks through "emergent abilities". However, these models still struggle with tasks that require strong reasoning skills, such as math word problems, competition-level programming problems, or physical-world tasks. Motivated by this, we propose reasoning with LLMs as a research theme and call for collaborations with professors/researchers from the academic community. The goal is to develop cutting-edge language-centered models with strong reasoning abilities that can solve complex reasoning tasks. We will explore directions including developing self-enhancement strategies, synthesizing symbolic reasoning-related data, developing and learning from reward models for fine-grained reasoning steps, and learning with external tools/environments. From a research perspective, we hope the collaborations can lead to impactful research outcomes such as open-source models and papers with state-of-the-art results on the latest research-driven leaderboards.

Topics:

- Self-Enhancement Strategy for LLMs

- Symbolic Reasoning-Related Data Synthesis

- Reward Model for Fine-Grained Reasoning Steps

- Learning LLMs with External Tools/Environment

Brain-Inspired AI

Brain-Inspired AI is a burgeoning field that seeks to enhance artificial intelligence by drawing inspiration from biological nervous systems. This research theme encompasses a range of projects that aim to replicate or simulate biological neural network mechanisms and processes in AI models, potentially leading to breakthroughs in energy efficiency, learning capabilities, problem-solving, and understanding of both artificial and natural intelligence.

Topics:

- Neural value alignment between human and AI

- Advancing spiking neural networks for energy-efficient AI

- Enhancing learning and memory consolidation of AI by sleep-like mechanisms

- Brain-inspired lifelong learning or continual learning

- Enhancing computer vision through biomimetic attention

Microsoft Antares: Accelerating Any of Your Workloads using Diverse Modern Hardware

Modern hardware is becoming not only more powerful but also more diverse, and modern applications, both AI and non-AI, are becoming more diverse as well. We are exploring ways to smartly utilize modern hardware to support this wide range of applications. We are also seeking to identify the best fit between popular workloads and future hardware.

Topics:

- Finding new workloads that have the potential for acceleration.

- Investigating more hardware types for acceleration. (e.g. Vulkan/Gaudi/Qualcomm/GraceHopper/..)

- Investigating elegant approaches to link applications with modern AI systems. (e.g. PyTorch/MLIR/..)

- Investigating efficient techniques to optimize kernels for different hardware architectures.

Continual Learning for Large Language Models

Large language models have shown remarkable capabilities in natural language understanding and generation. However, they also face significant challenges in adapting to new domains and tasks, as well as in keeping up with the ever-growing volume and diversity of data. In this project, we aim to develop novel methods to enable dynamic and efficient learning of large language models for various real-world problems around coding, mathematics, and sophisticated infrastructures. We will investigate how to train large language models in an online and incremental manner, how to detect and improve their weaknesses and biases, and how to automate and minimize the human supervision of the learning process.

Topics:

- Dynamic training of large language models

- Detection and improvement of model deficiencies

- Automation and minimal supervision of learning process

Post-Training: Efficient Fine-tuning and Alignment of Large Foundation Models

Post-training is a crucial phase to elicit the full capabilities of large models, improving the reasoning abilities of models and paving the way for the next breakthrough: deep reasoning. Motivated by this, we propose Post-Training as a focused research theme and call for collaborations with professors/researchers from the academic community. Our goal is to make a model autonomously determine how to solve a problem given only a prompt. To achieve this, we aim to develop cutting-edge techniques for fine-tuning and aligning LLMs/LMMs. This includes the implementation of efficient and robust reinforcement learning methods, process-supervised reward modeling, and streamlined search mechanisms for alignment, reasoning, and deep thinking. Our collaborative efforts aspire to yield impactful research papers and attain state-of-the-art results on contemporary research-driven leaderboards.

Topics:

- Efficient reinforcement learning algorithms with self-improving and self-critique skills

- Efficient search and exploration of high-quality reasoning paths

- Advanced deep reasoning capabilities (such as complex math and code)

Data Service in AI Era

To support the rapid growth of AI workloads and applications, AI platforms will be augmented with specialized AI Cloud Services aimed at enhancing cost-efficiency for AI training and inference. Data service is an indispensable one. It equips AI agents with massive knowledge, which can be used to train highly capable AI models and can serve as external memory to support fresh and private data. All these emerging AI applications require powerful and scalable data services. It is crucial to explore next-generation data services for emerging AI applications and ensure their continuous improvement to keep pace with the rapid growth of AI.

Topics:

- Unified IR Abstraction and Hardware Architecture Abstraction.

- Intelligent Query Execution Planning for new hardware and different data distribution.

- Advanced Indexing technologies for all kinds of hardware and data distribution.

Data-driven AI-assisted Hardware Infrastructure Design

Recent rapid advancements in AI workloads, such as ChatGPT, DALL-E 3 and more, have dramatically accelerated the transformation of cloud infrastructure because they bring markedly different computational requirements than before. Therefore, there is a strong and urgent need to build novel system tools that scale and speed up AI infrastructure evolution, improving its performance and efficiency together with AI application advancements. For example, cloud architects need to determine the viability of the cluster architecture, chip design, and hardware specification of the next-generation AI infrastructure for future AI models, based on a mixture of diverse objectives such as performance, power, efficiency, cost and more. This project aims to enable AI to co-evolve with AI infrastructure hardware design. We propose that by harnessing the interplay between AI infrastructure and AI, we can consistently generate high-quality data and effective methods for hardware performance prediction. This will aid cloud architects in accurately forecasting the performance trends of AI workloads on new hardware specifications, even without physical hardware access. The scope of this project encompasses performance predictions for cloud architecture and chip micro-architecture, as well as identifying hardware component requirements for targeted AI workloads. Based on our hardware copilot’s assessment, architects can make informed and unbiased decisions regarding new hardware specification requests to hardware vendors.

Topics:

- Data-driven High-fidelity Fast Microarchitecture Simulator

- Data-driven AI-assisted Microarchitecture Hyperparameter Searcher

Small Generative Models for Video

In the rapidly advancing field of machine intelligence, significant progress has been made in language models. However, genuine human-like intelligence arises from a diverse array of signals perceived by auditory, visual, and other sensory systems. To capture the richness of human understanding, our objective is to propel machine intelligence into new dimensions by enabling machines to learn from videos. Adhering to Richard P. Feynman’s profound insight, “What I cannot generate, I do not understand,” we believe that mastering the intricacies of video generation will provide machines with a more comprehensive understanding of the world. Our primary focus involves delving into this domain using small generative models and investigating diverse applications, particularly in the realm of video editing. We hope the collaborations can lead to influential research papers or innovative prototypes.

Topics:

- Text to Video Generation with Diffusion Models

- Video Understanding and Generation with Small Language Models

- Generative Video Editing

LLM-powered Decision-Making

The intersection of advanced natural language processing and decision science holds vast potential for advancing how artificial intelligence systems can support and enhance human decision-making processes. We are on the cusp of a new era where large language models (LLMs) can be leveraged to interpret complex data, predict outcomes, and guide choices in sequential decision-making tasks. With this vision in mind, we are reaching out to invite collaboration with esteemed professors and researchers from the academic community interested in the exploration of LLMs within the domain of sequential decision making. Our aim is to pioneer research that harnesses the capabilities of LLMs to tackle challenges in dynamic environments that require a sequence of decisions over time. This encompasses areas such as strategic planning, resource allocation, adaptive learning and beyond. We believe that through collaborative efforts, we can create groundbreaking models that not only understand and generate human-like text but can also reason, plan, and make decisions based on complex and dynamic inputs. We are particularly interested in collaborative projects that can lead to substantial research publications or foster innovative solutions with transformative impacts on real-world applications. We aim to explore the integration of LLMs with reinforcement learning to achieve sample-efficient policy learning, generalizable and robust policy learning, and sequential decision making under uncertain and dynamic contexts.

Foundation Model for Vision-based Embodied AI

Recent advancements in embodied AI have sparked interest in developing a foundation model that could lead to breakthroughs in the field. Motivated by this, we propose foundation models for vision-based embodied AI as a research theme and call for collaborations with professors/researchers from the academic community. The goal is to learn foundation models from scratch based on large-scale ego-centric embodied AI video data. By extracting commonsense knowledge from large-scale real-world data, the learned foundation model should be easily adaptable to various downstream tasks with limited demonstrations, and significantly improve performance. From a research perspective, we hope the collaborations can lead to impactful research papers or achieve state-of-the-art results on the latest research-driven leaderboards.

LLM on Large Scale Datacenters: Reassessing Scalability

The prevailing notion is that data centers are inherently scalable in design. Yet, with the expansion of data centers, we encounter a notable paradox: numerous resources within these centers prove to be non-scalable. A prime example is the escalating complexity in managing routing configurations. Each update in configuration precipitates substantial alterations in routing entries, thereby straining the capacities of the involved devices. In light of these challenges, the proactive application of current Large Language Models (LLMs) to predict the impacts of such configurations on each data center resource is becoming increasingly vital. This study seeks to bridge the gap between academic research and industry practice, focusing on solving critical resource issues in data centers, including IP allocation and routing table entry strategy optimization.

Topics:

- AI/LLM Applications in Networking

- Scaling Network Resources in Cloud Data Centers

SmartSwitch: Advancing Intelligent Data Centers with Programmable Hardware

We are witnessing an increasing trend of integrating smart, programmable hardware into the fabric of intelligent data centers. Microsoft’s forthcoming SmartSwitch, which employs a combination of NPU (Network Processing Unit) and DPU (Data Processing Unit) architectures, is currently in its early research stage. This technology is set to significantly boost cloud service performance at various levels, particularly in reducing latency. Beyond basic cloud functionalities, our intention, in partnership with the academic sector, is to delve deeper into the broader applications of such hardware. This exploration is not limited to, but includes AI training and inference, intelligent layer-7 firewalls, storage, and load balancers. Our aim is to jointly advance the widespread design and deployment of programmable hardware in data centers, thereby tackling practical challenges and expanding the realm of possibilities in data center operations.

Topics:

- Next-Generation Hardware in Data Centers

- Enhancing Applications with Advanced Hardware

- Improving Networking Efficiency through Innovative Hardware Solutions

AI Infrastructure in the Era of Large Models

In the current era of large-scale models, the significance of AI infrastructure has greatly increased. However, the limited availability of hardware and network resources is becoming a critical bottleneck in the development of AI infrastructure. In this collaboration, our focus is to jointly explore with the academic community how to effectively develop and apply new technologies, such as In-network Aggregation and DPU All-to-all engines, to improve hardware and network efficiency. This initiative aims to minimize resource wastage and expedite the training and inference processes, with the ultimate goal of deploying these technologies in real-world systems.

Topics:

- Next-Generation AI Infrastructure

- Optimizing Resources in AI Infrastructure

Naturally Parallel Network Simulator

Simulation is essential for evaluating data center network designs, because running real workloads at large data center scale costs too much. However, state-of-the-art discrete-event simulators (DES) fail to simulate data-center-scale networks, which can reach 100K nodes and 400 Gbps link rates. A DES runs tens to hundreds of thousands of times slower than real time when simulating a modern data center network of around 1,000 nodes. Such long simulation times make it impractical to simulate large-scale networks. The discrete-event simulator architecture is inherently at odds with parallelism, because it needs a centralized coordinator to calculate the next event. Although spatially dividing the network topology into multiple partitions enables some degree of parallelism, the coordination overhead between partitions is also high. We want to build network simulators that are naturally parallel. The intuition is to let each data center node (endpoint, switch, or link) run on its own thread. The key problem we need to solve is how to efficiently synchronize the simulation time of each node (thread).

Topics:

- Feasibility and potential gain of naturally parallel network simulator architecture.

- Efficient network modeling and programming for network simulator.

- Hardware acceleration for even higher efficiency and parallelism.
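To illustrate why the theme description calls a discrete-event simulator hard to parallelize, here is a minimal sketch of a sequential event loop. It is not taken from any particular simulator; the names `DiscreteEventSimulator`, `schedule`, `run`, and `simulate_packet` are hypothetical and chosen only for this illustration. The single priority queue plays the role of the centralized coordinator that decides the next event.

```python
import heapq
from typing import Callable, List, Tuple


class DiscreteEventSimulator:
    """Minimal sequential discrete-event loop (illustrative sketch only)."""

    def __init__(self) -> None:
        self.now = 0.0  # current simulation time
        self._queue: List[Tuple[float, int, Callable[[], None]]] = []
        self._seq = 0  # tie-breaker: equal-time events run in insertion order

    def schedule(self, delay: float, action: Callable[[], None]) -> None:
        """Register an action to fire `delay` time units from now."""
        heapq.heappush(self._queue, (self.now + delay, self._seq, action))
        self._seq += 1

    def run(self) -> int:
        """Process events in timestamp order; return how many were handled."""
        processed = 0
        while self._queue:
            # One global queue picks the next event, so every simulated
            # node is serialized behind this single loop.
            self.now, _, action = heapq.heappop(self._queue)
            action()
            processed += 1
        return processed


def simulate_packet(hops: int, link_delay: float) -> Tuple[float, int]:
    """Forward one packet across `hops` links; return (end time, event count)."""
    sim = DiscreteEventSimulator()

    def forward(remaining: int) -> None:
        if remaining > 0:
            sim.schedule(link_delay, lambda: forward(remaining - 1))

    sim.schedule(0.0, lambda: forward(hops))
    processed = sim.run()
    return sim.now, processed
```

Every event, whichever node it belongs to, passes through the one `heappop` call, which is the serialization point the description refers to. A per-node-thread design would remove this queue and would instead need a way to keep each node's local clock consistent with its neighbors, which is the synchronization problem the theme names as its key challenge.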

Nextgen AI Networking

As AI model training continues to scale up, cost-effective and efficient networking becomes increasingly crucial. Larger language models increasingly necessitate extensive AI infrastructures, often involving clusters of thousands or even tens of thousands of GPUs. With such voluminous models come equally vast clusters, larger network messages, and consequential underperformance when networks falter. Therefore, the imperative lies in creating a networking interface for these computational resources that is efficient, cost-effective, and aptly evolved to match the higher performance demands, scale, and bandwidth of future networks. This necessity gives rise to a myriad of focus areas: multipath routing, flexible delivery order, and more sophisticated congestion control mechanisms, amongst others. Consequently, we propose “Next-Generation AI Networking” as a pivotal research theme and welcome collaborative partnerships with professors and researchers from the academic community. Our goal is to thoroughly understand the implications of large-scale AI on networking and design comprehensive network protocols tailored for future AI infrastructures. Departing from the traditional networking research methodology, we endeavor to embed AI technology into our protocol designs. We anticipate that these collaborations will yield impactful research papers and contribute to state-of-the-art results on recent research-driven leaderboards.

Topics:

- AI workload characteristics and critical metrics: We aim to offer network researchers reliable benchmarks at the transport/network/link layers, derived from an in-depth analysis of prevalent MPI libraries.

- Network challenges in future AI infrastructure: We plan to delve deeper into the potential challenges that may arise in different network layers as AI cluster scales grow. Our focus will be on analyzing the strengths and weaknesses of existing solutions and offering new insights.

- AI-assisted network protocols: We aim to apply the latest AI technology to integrate all of these insights and adapt to the rapidly growing needs of future AI infrastructure.

Embodied AI

Recent advances in LLMs and Large Vision-Language Models bring a new paradigm and great opportunities to embodied AI and robotics. Motivated by this, we propose embodied AI as a research theme and call for collaborations with professors/researchers from the academic community. The ultimate goal is to identify and solve the key problems in developing embodied AI foundation models that demonstrate the same generalizability and capability on embodied AI tasks as GPT-4 does on language tasks. From a research perspective, we hope the collaborations can lead to key techniques, representations, and algorithms for embodied AI research, as well as a benchmark platform for evaluating the performance of different embodied AI models.

Topics:

- Vision-Language-Action Model

- Vision-Language-Action Data Generation and Model Benchmark

- Generalized Vision and Action Latent Representation for Embodied AI

- Any Other Topics That are Related to Key Challenges in Embodied AI

Mobile Sensing for Healthcare

The proliferation of wearable technology, such as smart watches, smart glasses, and earphones, presents an unprecedented opportunity to revolutionize healthcare through ubiquitous monitoring. This project aims to develop novel mobile sensing technologies designed to provide continuous health assessment and early detection of physiological anomalies. Leveraging state-of-the-art sensors, machine learning algorithms, and wireless communication, our technology aims to capture vital health information, including heartbeat, respiration, brain waves, eye movement, and body activities. Meanwhile, we plan to further integrate different sources of information to get a comprehensive picture of an individual’s health status in real time. The proposed sensing technology will be discreet, non-invasive, and power-efficient, ensuring minimal impact on the user’s daily routine while maximizing device longevity. By harnessing the widespread use of personal wearable devices, this platform will facilitate personalized health alerts, enable remote patient monitoring, and empower individuals with actionable insights into their well-being. Furthermore, the aggregation of data across diverse populations can enhance predictive analytics, contributing to a deeper understanding of public health trends and the advancement of preventive medicine.

Topics:

- Wireless sensing

- Wearable

- Signal processing

- Machine learning

LLM-based Mobile Agents

The advent of large language models (LLMs) has revolutionized natural language understanding and generation, with wide-ranging applications across various domains. However, their deployment on mobile devices presents unique challenges due to computational, storage, and energy constraints. This proposal aims to develop a novel framework that enables the efficient use of LLMs for intelligent task planning and execution directly on mobile devices, such as smartphones, smartwatches, and earphones. Our approach consists of three core objectives: 1) to optimize LLMs for mobile deployment, leveraging model distillation and quantization techniques to reduce model size and computational overhead without significant loss of performance; 2) to harness the capabilities of LLMs for sophisticated task planning, enabling mobile devices to understand complex user queries and generate actionable, context-aware plans; and 3) to architect a coordination mechanism that manages task execution across a distributed network of personal devices, ensuring synchronization and seamless user experience. To address these challenges, the proposed research will explore lightweight LLM architectures, edge computing paradigms, and cross-device communication protocols. By integrating LLMs with mobile technologies, we aim to create a pervasive, intelligent assistant that assists users in day-to-day activities, enhances productivity, and adapts to the dynamic nature of mobile contexts. The outcome of this work will not only push the boundaries of mobile computing but also pave the way for new applications of AI in personal and professional spheres, where real-time, on-device intelligence becomes a reality.

Topics:

- LLM

- Edge AI

- Task planning

Generative AI for Drug Discovery and Design

Drug discovery and design (DDD) is an extremely complex, time-consuming, and expensive process. Incentivized by recent successes in various applications such as computer vision, natural language understanding and generation, speech synthesis and recognition, and game playing, Generative AI (GenAI) has attracted more and more attention in DDD, revolutionizing the design-make-test-analyze (DMTA) cycle, accelerating the drug design process and therefore reducing cost. In this theme, we encourage researchers to collaborate and tackle important challenges in DDD. We welcome research proposals which aim to improve the DDD process by leveraging recent GenAI techniques.

Topics:

- GenAI for structure-based drug design

- GenAI for lead optimization

- GenAI for retrosynthesis

- GenAI for natural product synthesis

- GenAI for protein design

- GenAI for protein optimization

- Molecular docking

Data-driven Optimization

Combinatorial online learning with a focus on dynamic and uncertain changes in the systems. Combinatorial online learning lies at the intersection of traditional combinatorial optimization and online learning. It needs to address how to adaptively learn the underlying parameters of a model in an uncertain and noisy environment, with the goal of achieving the best possible performance on combinatorial optimization tasks. The proposed topic focuses on dynamic changes in the setting of combinatorial online learning. In particular, as the learning process proceeds, the learning agent may obtain better knowledge of the underlying system and thus in general perform better, obtaining more expected reward as learning goes on. This falls into the framework of rising bandits in the literature. However, there is not much research on how to combine rising bandits with the combinatorial multi-armed bandit (CMAB) framework. This project theme aims to fill this gap and provide a systematic study of combinatorial rising bandits. It has potential applications in traffic routing, wireless networking, social and information networks, etc.

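– Support period: 2024.5.1 – 2025.4.30

– Support provided: project funding (industry portion: USD 10K; government portion: KRW 80-130M), KRW 90-150 million in total

* Calculation, use, etc. of the government-portion project funds follow the ICT and Broadcasting R&D Management Regulations (IITP will provide guidance later)

* The industry portion follows a separate contract for the industry project.

– Selection review: expert review by Microsoft Research (document-based)

* Review results are notified individually only for selected projects and are not made public

* Korean universities selected as collaborating institutions for a project must complete the procedures required to carry out a government project, such as signing an agreement with IITP (each university signs an agreement as a collaborating institution)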

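- Dispatch of joint researchers

– Joint researchers: selected through a separate review

– Dispatch period: 170 days (planned for September 2024 – March 2025)

– Host institution: Microsoft Research (depending on the research area, dispatch is planned to Beijing or Shanghai in China, or to other locations)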

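- Eligibility

Each project team consists of students (2-5) and a supervising professor

Students: full-time master's or doctoral students enrolled in a graduate program of an IT-related department in Korea

* Korean nationals only (students on leave of absence and postdoctoral researchers are excluded)

Professor: a full-time faculty member of an IT-related department in Korea who can oversee the project and supervise the students' research during the support period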

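- Application process

Call for projects announced -> proposal submission (online submission, using the application form, proposal 100% in English) -> selection review -> notification of selection -> agreement signing and disbursement of project funds

* In the application form, prepare the budget on the basis of the industry portion (USD 10K). Government-portion project funding will be announced separately after the selection notification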

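- Application notes

A project team may apply to only one area in total

Applications that do not meet the eligibility requirements may be excluded from the selection review

* Persons and institutions currently restricted from participating in national R&D programs may not apply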

- How to apply

Application method: by email ([email protected])

Application deadline: Friday, March 15, 2024, 17:00

* Submitted documents will not be returned

- Contact

Program contact: Executive Director Miran Lee (이미란 전무), Microsoft Research (010-3600-4226, [email protected])
